For any Texas business in a weather-sensitive industry, the daily forecast isn't just a number—it’s a critical operational tool.

Whether you're managing an energy grid in Houston, scheduling a construction project in Dallas, or coordinating logistics across the state, your success hinges on accurate weather intelligence. But what really powers those predictions? The answer is the weather computer model, the sophisticated engine driving modern meteorology and a cornerstone of effective risk management.

The Engine Behind Your Business Forecast

Think of a weather computer model as a digital twin of the Earth's atmosphere. These incredibly complex programs run on supercomputers, processing millions of data points from satellite observations, ground-level sensors, and more to simulate what the atmosphere will do next.

It takes a snapshot of current conditions—temperature, pressure, humidity, and wind—and uses powerful physics equations to project how those conditions will evolve over time. This process is what transforms raw data into actionable intelligence for your business.

From Data Points to Strategic Decisions

The output from a weather computer model is much more than just a high temperature for the day. For Texas business leaders, it provides the essential insights that inform real-world risk management strategies. This technology is fundamental for:

- Protecting Assets: Anticipating the path and intensity of a hurricane gives petrochemical facilities along the Gulf Coast the time needed to initiate shutdown procedures safely, mitigating potentially catastrophic damage. For example, a 72-hour forecast lead time can be the difference between a controlled shutdown and a forced, high-risk emergency response.

- Securing Supply Chains: High-resolution precipitation forecasts help logistics companies reroute fleets to avoid flash floods, preventing costly delays and ensuring driver safety. A 2% improvement in routing efficiency due to weather avoidance can translate into millions in annual savings for large operators.

- Ensuring Operational Continuity: Accurate long-range temperature outlooks enable energy providers to forecast demand spikes during a summer heatwave, helping to prevent grid strain and comply with ERCOT reliability standards.

These powerful simulations are the foundation for the forecasts you use every day. While a standard weather app gives a simplified view, understanding the underlying model data can offer a significant competitive advantage. For businesses looking to integrate these data streams directly, exploring options like the Google Weather API can be a crucial step in building a custom risk management dashboard.

Disclaimer: The information provided by ClimateRiskNow is for educational purposes to help businesses understand and manage climate-related risks. ClimateRiskNow does not sell insurance or provide financial advice. Our goal is to empower Texas industries with data-driven insights for making informed operational decisions.

Ultimately, understanding what a weather computer model is—and what it can do—is the first step toward using this technology for smarter, more resilient business planning in the face of Texas's dynamic and often extreme weather.

From Equations to ENIAC: The Birth of the Digital Forecast

To trust the sophisticated forecasts we rely on today, it helps to know their scientific origins. Modern weather computer models weren't invented overnight; they're the culmination of more than a century of work to translate the chaotic physics of our atmosphere into the orderly language of mathematics. This quest started long before the first computer whirred to life, born from the idea that weather wasn't random—it was just an incredibly complex physics problem waiting to be solved.

The core concept, known as Numerical Weather Prediction (NWP), emerged in the early 20th century. The theory was straightforward: if you could capture a snapshot of the atmosphere's current state—its temperature, pressure, wind, and humidity—you could use the fundamental laws of physics to calculate what it would do next.

The problem? The sheer number of calculations was staggering and impossible to perform by hand in any useful amount of time. For decades, NWP remained a brilliant but theoretical concept, a classic case of science being years ahead of the available technology.

When Computation Finally Caught Up

The game changed with the arrival of the first electronic computers. These massive machines provided the missing piece of the puzzle: the raw processing power needed to crunch the atmospheric equations fast enough to be useful. The theory of NWP was finally ready for its first real-world test.

That moment came in 1950 in a landmark experiment. A team of meteorologists ran the first-ever successful numerical forecast on the ENIAC, one of the world's earliest general-purpose computers. The simulation, which focused on weather patterns over North America, took the machine nearly 24 hours to produce a single 24-hour forecast. It was painfully slow by today's standards, but it was a monumental success. It proved that the physics of the atmosphere could be calculated to predict the weather.

This achievement marked the turning point for meteorology. It shifted the field from one based almost entirely on observation and experience to a hard, data-driven predictive science. The weather, once seen as an uncontrollable force, was now fundamentally a problem of physics and computation.

From ENIAC to Enterprise-Level Planning

The legacy of that first ENIAC run is in every forecast that guides a critical business decision today. Those early, slow calculations have evolved into the incredibly powerful global and regional models that drive modern weather intelligence.

For business leaders in Texas, this history is more than trivia. The ability to see a hurricane developing days in advance hinges on the very same physical principles tested on the ENIAC. The journey from abstract equations to the room-sized ENIAC, and now to the supercomputers running today’s weather models, highlights the scientific rigor behind the forecasts your operations depend on. Whether you're balancing the power grid during a brutal heatwave or scheduling a construction project around a severe storm threat, you're leveraging a century of progress to protect your assets and maintain operational continuity.

Building a Digital Atmosphere Step by Step

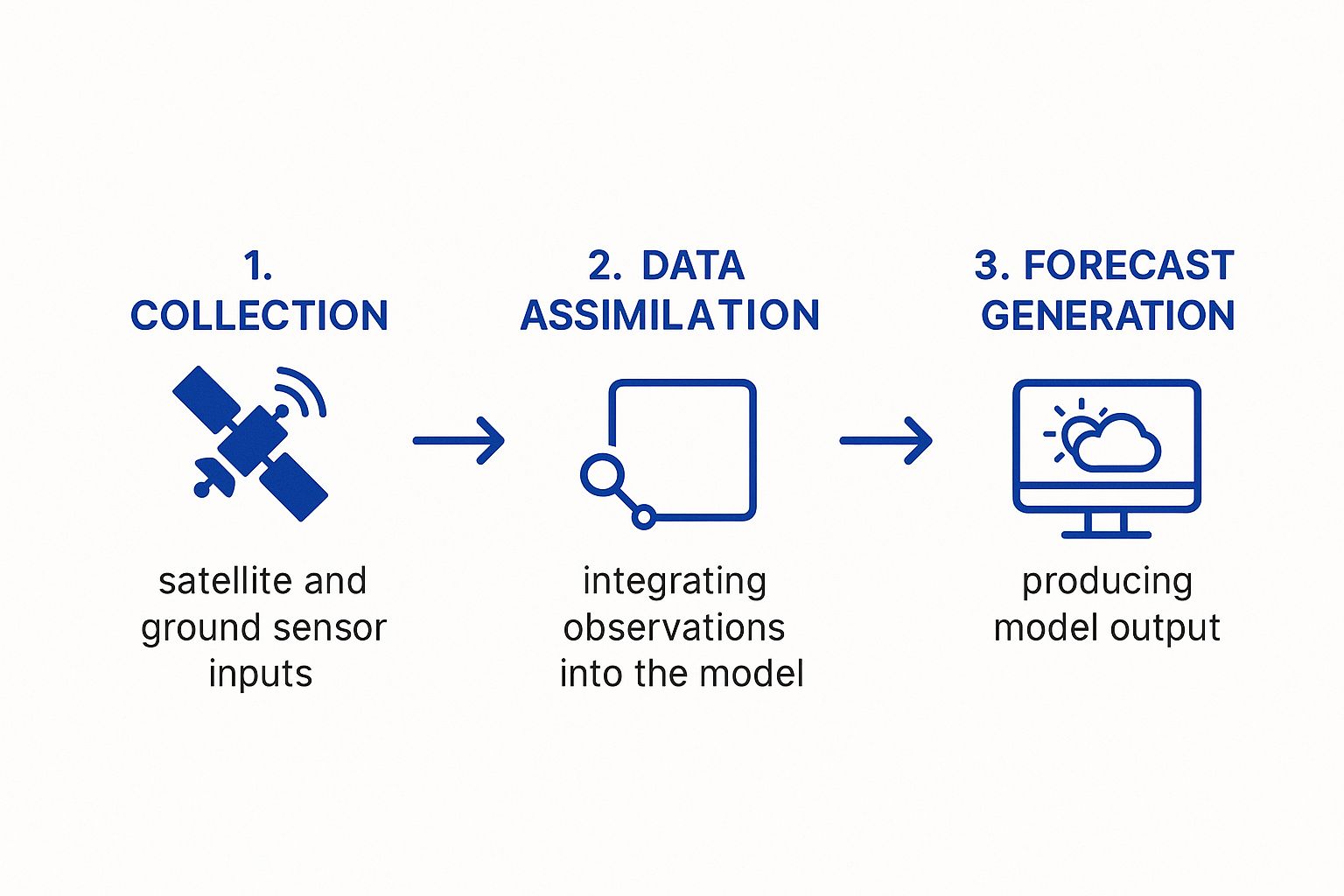

To make sound operational decisions based on a forecast, you need to understand not just what a weather computer model predicts, but how it arrives at that prediction. The process can be broken down into three critical stages, starting with a massive data-handling challenge: collecting an enormous volume of real-time observations from around the globe. This information is the raw material, the essential starting point for any reliable forecast.

Step 1: Data Assimilation

The first stage, Data Assimilation, is about feeding the model its initial picture of the real world. Supercomputers pull in millions of data points from a vast global network to create a comprehensive, instantaneous snapshot of the entire atmosphere.

This raw information comes from:

- Satellite Observations: Capturing cloud cover, surface temperatures, and atmospheric moisture from space.

- Ground Stations: Measuring temperature, pressure, wind speed, and humidity at thousands of locations, including numerous sites across Texas monitored by agencies like the National Weather Service (NWS).

- Weather Balloons: Sending back data on conditions at different altitudes as they rise through the atmosphere.

- Buoys and Ships: Reporting on oceanic conditions, which are a primary driver of our weather patterns.

This isn't just data collection; it’s an intelligent blending of these observations into a cohesive, three-dimensional grid that represents the current state of the atmosphere. The quality of this initial snapshot is critical—any errors or gaps here will amplify as the forecast runs.
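The "intelligent blending" described above can be illustrated with the simplest textbook form of data assimilation. A model's short-range background estimate is combined with a new observation, and each is weighted by how much it is trusted. The sketch below is a single-variable optimal-interpolation update with assumed error variances. Operational centers use far more elaborate multi-dimensional methods, such as variational or ensemble-based schemes. The function name and the numbers are hypothetical.

```python
def assimilate(background, observation, background_var, observation_var):
    """Blend a model background value with an observation (scalar optimal interpolation).

    The gain weights the observation by how uncertain the background is relative
    to the observation. Returns the analysis value and its reduced error variance.
    """
    gain = background_var / (background_var + observation_var)
    analysis = background + gain * (observation - background)
    analysis_var = (1.0 - gain) * background_var
    return analysis, analysis_var


# Hypothetical example: the model background says 30.0 °C at a Texas station,
# the station reports 32.0 °C, and both are assumed equally uncertain (variance 1.0).
value, variance = assimilate(30.0, 32.0, background_var=1.0, observation_var=1.0)
print(value, variance)  # 31.0 0.5
```

When the observation is trusted less, for example with `observation_var=4.0`, the analysis stays closer to the background at 30.4 °C.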

The infographic below shows how this foundational process works, from collecting raw data to producing the final forecast.

As you can see, a collection of disconnected data points is systematically organized and processed to create the actionable intelligence your business relies on for risk management.

Step 2: The Simulation Engine

Once the initial atmospheric state is locked in, the Simulation Engine takes over. This is the heart of the weather model, where enormous computing power is used to solve the equations that govern the atmosphere's behavior, drawn from fluid dynamics and thermodynamics.

The supercomputer calculates how the conditions in each grid cell of the digital atmosphere will interact with its neighbors over a short period, often just a few minutes. It then repeats this process thousands of times, advancing the simulation step by step into the future. This iterative number-crunching generates predictions for hours, days, and even weeks ahead.
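The cell-by-cell, step-by-step update described above can be sketched with a toy one-dimensional example. A tracer, such as a band of moisture, is carried by a constant wind across a ring of grid cells using a first-order upwind scheme. This is an illustrative assumption and not how GFS or ECMWF discretize the atmosphere. Real models solve coupled three-dimensional equations for many variables at once. The function names and setup are hypothetical.

```python
def advect_upwind(field, courant, steps):
    """Carry a 1D tracer field downwind on a ring of grid cells.

    Uses a first-order upwind scheme for a constant wind blowing toward higher
    indices. `courant` is wind speed * time step / cell width and must lie in
    (0, 1] for the scheme to stay stable.
    """
    if not 0 < courant <= 1:
        raise ValueError("courant must be in (0, 1] for stability")
    c = list(field)
    n = len(c)
    for _ in range(steps):
        # Each cell keeps part of its own value and gains part of its upwind
        # neighbour's value; c[i - 1] wraps around at i = 0 (periodic boundary).
        c = [(1 - courant) * c[i] + courant * c[i - 1] for i in range(n)]
    return c


def center_of_mass(field):
    """Index-weighted average position of the tracer."""
    return sum(i * v for i, v in enumerate(field)) / sum(field)


# Hypothetical setup: a band of moisture in cells 10-19 of a 100-cell domain.
initial = [1.0 if 10 <= i < 20 else 0.0 for i in range(100)]
final = advect_upwind(initial, courant=0.5, steps=40)
print(round(center_of_mass(initial), 2), round(center_of_mass(final), 2))  # 14.5 34.5
```

Each step moves the band half a cell downwind, so 40 steps shift it 20 cells. The band also smears out. That smearing is numerical diffusion, a side effect of this deliberately simple scheme.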

The scale of this task is immense: producing a single forecast requires supercomputers capable of trillions of calculations per second. This is why advances in supercomputing power translate directly into more accurate forecasts that can resolve smaller, localized weather events, a critical factor for Texas industries.

For a logistics manager, the precision of this engine determines how reliably a forecast can time a line of severe thunderstorms, allowing fleets to be rerouted ahead of time. For an agricultural operator in the Texas Panhandle, its accuracy is critical for the rainfall predictions that drive irrigation and planting schedules.

Step 3: Output Generation

The final stage is Output Generation. The raw data from the simulation engine is a massive collection of numbers, largely unusable for non-specialists. This step translates that raw data into useful forecast products that can guide business decisions.

This translation process creates:

- Familiar Weather Maps: Visualizing pressure systems, fronts, and expected precipitation.

- Specific Data Points: Providing hourly temperature, wind speed, and precipitation probability for a precise location, like a construction site or energy facility.

- Risk Metrics: Calculating values like heat index, wind chill, or the probability of severe weather like hail or tornadoes, which are directly relevant to OSHA regulations and worker safety protocols.
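Heat index is one example of turning raw model output into a risk metric, since it can be computed from forecast temperature and relative humidity. The sketch below follows the regression the U.S. National Weather Service publishes for heat index. It uses a simple formula at lower values and switches to the Rothfusz regression, with two humidity adjustments, once the estimate reaches about 80 °F. The function name is our own. Any alert threshold applied to the output is a business decision and not part of the formula.

```python
import math


def heat_index_f(temp_f, rel_humidity):
    """Approximate heat index (°F) from air temperature (°F) and relative humidity (%).

    Follows the NWS approach: a simple formula first, switching to the Rothfusz
    regression (with low- and high-humidity adjustments) when the result is >= 80 °F.
    """
    t, rh = temp_f, rel_humidity
    simple = 0.5 * (t + 61.0 + (t - 68.0) * 1.2 + rh * 0.094)
    if (simple + t) / 2.0 < 80.0:
        return simple

    hi = (-42.379 + 2.04901523 * t + 10.14333127 * rh
          - 0.22475541 * t * rh - 0.00683783 * t * t
          - 0.05481717 * rh * rh + 0.00122874 * t * t * rh
          + 0.00085282 * t * rh * rh - 0.00000199 * t * t * rh * rh)

    # Adjustments for very dry and very humid conditions.
    if rh < 13 and 80 <= t <= 112:
        hi -= ((13 - rh) / 4.0) * math.sqrt((17 - abs(t - 95.0)) / 17.0)
    elif rh > 85 and 80 <= t <= 87:
        hi += ((rh - 85) / 10.0) * ((87 - t) / 5.0)
    return hi


# Hypothetical hourly forecast for a job site: 90 °F at 50% relative humidity.
print(round(heat_index_f(90, 50)))  # 95
```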

This final step makes the complex science of a weather model directly applicable to business operations. By understanding these three stages—Data Assimilation, Simulation, and Output Generation—business leaders in Texas can better appreciate the strengths and limitations of any forecast, building confidence and empowering more informed, data-driven decisions when managing weather-related risks.

A Guide to Key Weather Models for Texas Industries

If you’ve ever looked at two different weather apps and seen two different forecasts, you’ve witnessed the world of weather computer models in action. That difference isn't a sign of bad science—it’s the result of different tools designed for different purposes.

For any Texas business, understanding that not all forecasts are created equal is the first step toward better risk management. Each forecast is powered by a unique weather computer model with its own method for analyzing the atmosphere, giving it specific strengths and limitations. Knowing why these forecasts diverge is critical. It’s the difference between scheduling a major concrete pour at the right time in Austin or preparing a Gulf Coast facility for a hurricane threat that one model sees and another downplays.

The Global Titans: GFS and ECMWF

On the world stage, two models are the heavyweights behind much of today's long-range forecasting: the American GFS and the European ECMWF. Think of them as providing the foundational, big-picture guidance that countless other forecasts are built upon.

The GFS (Global Forecast System): Operated by the U.S. National Weather Service (NWS), this is the workhorse of American weather forecasting. It runs four times a day, and its data is publicly available, which is why it powers so many weather apps. Its biggest advantage is that fresh guidance is available every six hours.

The ECMWF (European Centre for Medium-Range Weather Forecasts): Known in the industry as the "Euro model," the ECMWF's global model has a well-earned reputation as one of the most accurate available, especially in the 3-10 day forecast range. Much of its data has traditionally been available only under license, and its track record for predicting the path of major systems like hurricanes is among the best in the world. Its full-length forecasts are issued twice a day.

A logistics company might lean on the GFS for frequent updates on cross-country routes. But for a petrochemical plant monitoring a potential hurricane a week out, the ECMWF’s long-range accuracy is often the more trusted guide for making critical, early-stage preparations.

High-Resolution Models for Local Threats

Global models paint with a broad brush. To see the finer details—like exactly where and when thunderstorms will form—forecasters turn to high-resolution models that focus on smaller areas like North America.

- The NAM (North American Mesoscale Model): For short-range forecasting in the U.S., the NAM is an essential tool. It updates every six hours and produces forecasts in three-hour increments out to 84 hours. Its sharper resolution allows it to better pinpoint phenomena like thunderstorm complexes or the precise timing of a cold front sweeping through Texas.

A construction manager in Dallas relies on this kind of detail. The GFS might just say "afternoon thunderstorms," but the NAM can provide a much better idea of whether that threat is for 2 PM or 5 PM—a crucial distinction that can save a project from costly delays and material loss.

To help clarify these differences, here’s a quick breakdown of the major models relevant to Texas operations.

Comparison of Major Weather Computer Models

This table highlights the key characteristics of the primary weather models used for forecasting in North America, helping decision-makers understand their different strengths and applications.

| Model Name | Operating Agency | Geographic Coverage | Primary Strength | Typical Use Case for Texas Business |
|---|---|---|---|---|
| GFS | National Weather Service (NWS) | Global | High update frequency (4x/day) and public data access | Short-term logistics and daily operational planning |
| ECMWF | European Centre for Medium-Range Weather Forecasts | Global | High accuracy in medium-range (3-10 day) forecasts | Long-lead-time planning for major events like hurricanes |
| NAM | National Weather Service (NWS) | North America | High resolution for detailed short-range (0-84 hr) forecasts | Pinpointing timing of fronts, thunderstorms, and local winds |

Each model serves a purpose, and understanding their individual roles is key to building a complete weather intelligence picture.

Why Model Differences Matter for Your Business

Choosing which model to trust has direct operational and financial consequences. Because each model uses different physics and data, one might predict a crippling ice storm for North Texas while another shows only a cold rain.

This is why professionals use an ensemble approach—looking at multiple models at once. If the GFS, ECMWF, and NAM all point to the same outcome, confidence is high. If they show wildly different scenarios, it’s a clear signal of uncertainty that calls for contingency planning. This multi-model strategy is fundamental to turning raw weather data into real business intelligence. Pairing this with a deep analysis of historical weather patterns, such as our breakdown of NOAA data for Texas facilities, creates a powerful, forward-looking view of your operational risk.
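One simple way to operationalize this multi-model check is to measure the spread between the models' forecasts for the same variable and location. The sketch below assumes you already have one value per model, such as a forecast overnight low. The function name, the 3 °F agreement threshold, and the sample values are hypothetical. Real ice-versus-rain calls depend on the full vertical temperature profile, not on a single surface value.

```python
def model_agreement(forecasts, max_spread):
    """Summarize how closely several models agree on one forecast value.

    forecasts: mapping of model name -> forecast value (same units for all).
    max_spread: largest max-minus-min difference still treated as agreement.
    """
    values = list(forecasts.values())
    spread = max(values) - min(values)
    consensus = sum(values) / len(values)
    confidence = "high" if spread <= max_spread else "low: plan contingencies"
    return consensus, spread, confidence


# Hypothetical overnight-low forecasts (°F) for one North Texas site.
print(model_agreement({"GFS": 30, "ECMWF": 31, "NAM": 29}, max_spread=3))
print(model_agreement({"GFS": 28, "ECMWF": 36, "NAM": 33}, max_spread=3))
```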

Using Climate Models for Long-Term Strategy

A weather computer model is exceptional for forecasting operational conditions over the next seven to ten days. But for a business leader in Texas, strategic planning also means assessing risks that emerge over years and decades. For that long-range view, we turn to a different but related tool: the climate model.

Distinguishing between the two is key to building a business that can withstand both immediate and long-term environmental threats.

Here's the simplest way to differentiate them: Weather models forecast a specific event—like a thunderstorm hitting Dallas next Tuesday. Climate models, on the other hand, project the underlying trend, like the increasing frequency of severe droughts in Central Texas over the next thirty years. Both are essential, but they answer very different questions.

Climate models evolved from the same science as their weather-focused counterparts. They are a direct outgrowth of numerical weather prediction techniques, first taking shape in the mid-1950s. A scientist named Norman Phillips developed the very first general circulation model (GCM) in 1956, a groundbreaking effort to simulate climate dynamics over large areas and long timeframes. Though primitive by today's standards, it proved that modeling the entire climate system with a computer was possible. You can explore the long history of climate models to see how that foundation was built.

From Daily Operations to Decadal Planning

For any Texas industry, using the right model for the right timeframe is a strategic necessity. A logistics firm uses a short-term weather model to reroute trucks around a forecasted ice storm. That same company should use climate model projections to decide where to build its next major distribution hub, avoiding areas with escalating flood or wildfire risks.

These long-range simulations are built to answer the big, strategic questions that define the future viability of a business:

- Site Selection: Does it make sense to build a new manufacturing plant on the coast where sea-level rise is projected to increase storm surge risk by 20% over the facility's lifespan?

- Resource Management: How should an agricultural business in the Panhandle plan its long-term water strategy based on projections of more frequent and intense droughts, and what are the implications for compliance with local groundwater conservation district regulations?

- Infrastructure Investment: Should an energy company begin hardening its grid infrastructure in West Texas to handle a projected increase in extreme heat days, thereby reducing the risk of costly outages and regulatory scrutiny?

By simulating atmospheric and oceanic systems over decades, climate models reveal the slow-moving but powerful trends that can fundamentally alter a company's operational landscape. They provide the data-driven foresight needed for capital-intensive decisions with long-term consequences.

Applying Climate Insights to Texas Industries

The insights from climate models have direct, practical applications for managing risk across key Texas sectors. A petrochemical company on the Gulf Coast must factor in projections for increased hurricane intensity when planning infrastructure upgrades to meet both safety standards and operational goals.

Likewise, construction firms must consider future heat extremes that could affect worker safety protocols under OSHA and the performance of building materials over time. These forward-looking analyses are now a core part of modern risk management. Putting this data to work is much easier with dedicated platforms that translate complex scientific projections into clear business intelligence. Our guide to climate risk assessment tools takes a deeper dive into how businesses can tap into these powerful resources.

Ultimately, weather models keep your business safe today. Climate models ensure it’s viable and profitable tomorrow. Mastering both is essential for navigating the full spectrum of environmental risks in Texas.

Putting Weather Intelligence into Action

Understanding the science behind a weather computer model is one thing; translating its complex data into a real-world competitive advantage is another. For business leaders in Texas, the true value comes from weaving this intelligence directly into daily operational strategy. The goal isn't just to react to a forecast—it's to proactively make data-driven decisions that reduce downtime, enhance safety, and protect the bottom line.

This means turning model outputs—like precipitation probabilities or wind speed forecasts—into specific, predefined triggers for your teams. It’s about building a framework where weather data is a core component of risk management and daily planning.

From Model Data to On-the-Ground Decisions

The key is to connect specific weather model outputs to specific business actions. Consider a logistics company running routes through the Dallas-Fort Worth area. They can use high-resolution models to do more than just see a chance of hail. They can build an automated alert that is triggered when a model’s hail probability climbs above a certain threshold—say, 60%—along a planned route.

That trigger sets a predefined plan in motion:

- Immediate Rerouting: The system automatically identifies the safest and most efficient alternate route for any trucks heading into that high-risk zone.

- Driver Communication: Drivers receive an instant notification about the change and the reason for it, protecting both them and their cargo.

- Customer Updates: The system can even send a proactive notification to customers about a potential slight delay required for a safety diversion.
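The trigger logic in this example can be sketched in a few lines. The `Waypoint` structure, the `hail_trigger` function, the action names, and the route values are all hypothetical. The sketch assumes hail probabilities for each route segment have already been extracted from a high-resolution model product. It uses the 60% threshold from the text, and "climbs above" is read as strictly greater than.

```python
from dataclasses import dataclass


@dataclass
class Waypoint:
    name: str
    hail_probability: float  # 0.0 to 1.0, assumed to come from a model product


def hail_trigger(route, threshold=0.60):
    """Return waypoints whose hail probability exceeds the threshold,
    plus the predefined actions to start if any were found."""
    flagged = [wp.name for wp in route if wp.hail_probability > threshold]
    actions = ["reroute trucks", "notify drivers", "update customers"] if flagged else []
    return flagged, actions


# Hypothetical route segments through the Dallas-Fort Worth area.
route = [
    Waypoint("I-35E Waxahachie", 0.20),
    Waypoint("I-30 Arlington", 0.65),
    Waypoint("I-35W Denton", 0.40),
]
print(hail_trigger(route))
# (['I-30 Arlington'], ['reroute trucks', 'notify drivers', 'update customers'])
```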

This proactive approach, driven by model data, turns a potentially devastating and costly event into a manageable operational adjustment. It can prevent costly cargo damage, steer drivers away from dangerous conditions, and keep the supply chain moving.

Disclaimer: The information provided here is for educational purposes to illustrate risk management concepts. It is not intended as financial, insurance, or operational advice. ClimateRiskNow does not sell insurance or related financial products.

Industry-Specific Applications in Texas

These same principles apply across every weather-sensitive Texas industry, each with its own unique vulnerabilities. A sophisticated weather computer model provides the foresight needed to act with confidence.

Construction in Austin: A construction firm can use reliable precipitation outlooks from short-range models to schedule critical concrete pours. Pouring a slab right before a heavy downpour can ruin the entire job, causing significant financial losses and project delays. By setting a rule—for example, "No major pours if the 6-hour precipitation probability is over 30%"—they sidestep these costly mistakes and keep projects on schedule. Knowing how to interpret these forecasts is key, and our guide on how to read a weather report can give your team deeper insights.
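A rule like this can be checked automatically against each forecast window. The sketch below assumes the forecast is available as a list of 6-hour windows, each with a precipitation probability. The function name and the sample values are hypothetical. A window qualifies when its probability is at or below 30%, which matches "no pours if over 30%."

```python
def first_pour_window(windows, max_pop=0.30):
    """Return the start label of the first 6-hour window whose precipitation
    probability is at or below max_pop, or None if no window qualifies."""
    for start, pop_6h in windows:
        if pop_6h <= max_pop:
            return start
    return None


# Hypothetical 6-hour precipitation probabilities for an Austin site.
windows = [("Mon 06:00", 0.55), ("Mon 12:00", 0.40), ("Tue 06:00", 0.20)]
print(first_pour_window(windows))  # Tue 06:00
```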

Energy in Houston: An energy provider can anticipate demand spikes by monitoring medium-range temperature models. When a forecast shows a heatwave with temperatures 5-7°F above normal for three straight days, it can trigger protocols to bring more power generation online. This prevents grid strain, reduces the risk of brownouts, and supports compliance with ERCOT's operational standards during peak demand. This strategic foresight enables smoother, more cost-effective load management than scrambling to react in real time.
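The heatwave trigger described here reduces to looking for a run of consecutive days above a temperature anomaly. The sketch below compares forecast daily highs with climatological normal highs, using the 5 °F threshold and three-day run from the text. The function name and the sample temperatures are hypothetical.

```python
def heatwave_trigger(forecast_highs, normal_highs, min_anomaly=5.0, run_length=3):
    """Return the index of the first day that completes a run of `run_length`
    consecutive days at least `min_anomaly` °F above normal, or None."""
    streak = 0
    for day, (forecast, normal) in enumerate(zip(forecast_highs, normal_highs)):
        # Extend the streak on an anomalously hot day; otherwise reset it.
        streak = streak + 1 if forecast - normal >= min_anomaly else 0
        if streak == run_length:
            return day
    return None


# Hypothetical 7-day forecast highs vs. normal highs for Houston (°F).
forecast = [95, 99, 101, 102, 100, 96, 94]
normal = [94, 94, 94, 95, 95, 95, 95]
print(heatwave_trigger(forecast, normal))  # 3
```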

By building these direct links between model outputs and operational plans, Texas businesses can systematically de-risk their operations. This strategic use of weather intelligence is no longer a niche advantage; it is becoming a standard practice for building a resilient, competitive business in a state defined by its dynamic weather.

Your Questions on Weather Models, Answered

If you've ever felt you're getting mixed signals from different weather forecasts, you're not alone. It's a common challenge for business leaders trying to make critical decisions. Why does one source predict a calm week while another warns of a major storm? The answer almost always comes down to the weather computer model being used.

Let’s break down the most common questions we hear from Texas decision-makers. Understanding these concepts is the first step toward using weather intelligence as a strategic tool, not just a daily report.

Why Do Forecasts From Different Sources Disagree?

The simple answer is that different news outlets or apps are often using different playbooks. One might pull data from the American GFS model, while another uses the European ECMWF. Each of these models is a completely separate, complex system. They start with slightly different data, solve the same basic physics with different numerical methods and approximations, and process everything at different resolutions.

These differences can lead to different outcomes, especially for forecasts more than a few days out. This is why professional meteorologists rarely rely on a single model. Instead, they use an "ensemble" approach, comparing the outputs of multiple models to see where they agree and disagree. This helps them understand not just the most likely weather scenario, but also the degree of uncertainty in the forecast. For managing business risk, that range of possibilities is often far more valuable than a single, potentially misleading prediction.

How Far in Advance Can We Reliably Predict the Weather?

Generally, a modern weather computer model provides a useful, detailed forecast out to about seven to ten days, with confidence highest in the first few days. Beyond that point, accuracy drops off quickly.

The reason is the chaotic nature of the atmosphere itself. Tiny, imperceptible errors in the initial data can compound and grow exponentially over time, a concept often called the "butterfly effect." This is why long-range outlooks do not predict a specific thunderstorm on a specific day three weeks from now. Instead, they focus on broad trends, such as whether a month is projected to be "hotter and drier than average."
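The "butterfly effect" can be seen directly in the Lorenz-63 system. It is a classic three-variable toy model of atmospheric convection, not an operational weather model. The sketch below integrates it twice from starting points that differ by one part in a hundred million and prints how far apart the two runs drift over time. The integration settings are our own illustrative choices: fourth-order Runge-Kutta, a step of 0.01, and 3,000 steps.

```python
def lorenz_step(state, dt, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Advance the Lorenz-63 system one step with fourth-order Runge-Kutta."""
    def deriv(s):
        x, y, z = s
        return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

    def add(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = deriv(state)
    k2 = deriv(add(state, k1, dt / 2))
    k3 = deriv(add(state, k2, dt / 2))
    k4 = deriv(add(state, k3, dt))
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))


def separation_history(perturbation=1e-8, dt=0.01, steps=3000):
    """Run two copies from initial states differing by `perturbation` in x
    and record the distance between them after every step."""
    a, b = (1.0, 1.0, 1.0), (1.0 + perturbation, 1.0, 1.0)
    history = []
    for _ in range(steps):
        a, b = lorenz_step(a, dt), lorenz_step(b, dt)
        history.append(sum((p - q) ** 2 for p, q in zip(a, b)) ** 0.5)
    return history


hist = separation_history()
for t in (1, 10, 20, 30):  # model time units (100 steps each)
    print(f"t={t:>2}: separation {hist[t * 100 - 1]:.2e}")
```

The gap starts far too small to measure in practice. It grows until the two runs are as different as two unrelated states of the system, which is why specific day-to-day detail is lost at long lead times.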

For Texas businesses, this means you can plan your next week's operations with a high degree of confidence based on detailed model guidance. For anything further out, the strategy should shift from specific planning to broader risk assessment based on seasonal outlooks and historical climate data.

What Is an Ensemble Model and Why Is It Important?

Think of a standard model run, known as a "deterministic" forecast, as providing a single answer: it predicts one specific outcome. An ensemble model takes a completely different approach.

Instead of running the weather computer model once, it is run dozens of times. For each run, the initial starting conditions—the temperature, pressure, and wind data—are slightly tweaked. This process doesn't produce one future; it produces a whole range of possible futures.

The result is powerful. It allows us to see the probability of an event happening and how confident we should be in the overall forecast. From a risk management perspective, this is a game-changer. Ensembles give you a clear picture of the full spectrum of possibilities, from the best-case to the worst-case scenario, which is exactly the kind of intelligence you need to make sound strategic decisions.
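The ensemble idea can be sketched with the same kind of toy model. The code runs the Lorenz-63 system 50 times from slightly perturbed copies of one starting state and counts how many members end up with a chosen "event". Here the event is defined as the x variable being positive, a stand-in chosen only for illustration. The member count, perturbation size, lead time, and function names are all hypothetical. Operational ensembles perturb real atmospheric analyses and often the model physics as well.

```python
import random


def lorenz_rk4(state, dt=0.01, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """One fourth-order Runge-Kutta step of the Lorenz-63 toy atmosphere."""
    def f(s):
        x, y, z = s
        return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

    def shift(s, k, h):
        return tuple(a + h * b for a, b in zip(s, k))

    k1 = f(state)
    k2 = f(shift(state, k1, dt / 2))
    k3 = f(shift(state, k2, dt / 2))
    k4 = f(shift(state, k3, dt))
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))


def run_ensemble(analysis, members=50, noise=1e-3, steps=800, seed=42):
    """Run `members` copies from randomly perturbed initial states and
    return each member's final x value."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    finals = []
    for _ in range(members):
        state = tuple(v + rng.gauss(0.0, noise) for v in analysis)
        for _ in range(steps):
            state = lorenz_rk4(state)
        finals.append(state[0])
    return finals


finals = run_ensemble((1.0, 1.0, 1.0))
probability = sum(x > 0 for x in finals) / len(finals)  # share of members with the "event"
print(f"event probability: {probability:.0%}, "
      f"member range: {min(finals):.1f} to {max(finals):.1f}")
```

The output is a probability and a range rather than a single value. This is the best-case-to-worst-case view described above.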

At ClimateRiskNow, our mission is to translate complex meteorological data into clear, actionable risk intelligence. Our assessments provide Texas businesses with the site-specific insights needed to protect assets, maintain operations, and build resilience against the weather threats that impact their bottom line.

See how our analysis can safeguard your operations. Request a demo with our team.